Cloud Architecture Blueprint - Central vs Decentral

Central (“HQ”) vs decentral (“site”) appears on various levels. Are the sites empowered to fine tune their workflows or should all be centralized to save costs? Does each site maintain their own IT for flexibility or should all be identical and managed centrally? These are fundamental business questions without a definitive answer. A central system can never be as flexible as tailored systems in each site. Every site building their own IT means replicating the same infrastructure multiple times instead of sharing one.

With data the question gets even worse, as we have both requirements. The flexibility for the sites and yet the overview across all sites. How can this contradiction be resolved?

We need a solution that is

- Flexible to handle all site’s individual data requirements

- Centralized to achieve a unified view across all sites

- Allows the site to handle their data requirements without breaking the centralized view

- Providing a value to the site in terms of “we get something useful from central” and hence have an intrinsic motivation to provide the data instead of being pressured

Okay, that does not resolve the contradiction, most obviously in the area of the data model. We need a centrally maintained data model every site adheres to, that can be modified by the sites, without breaking others.

Table of Contents

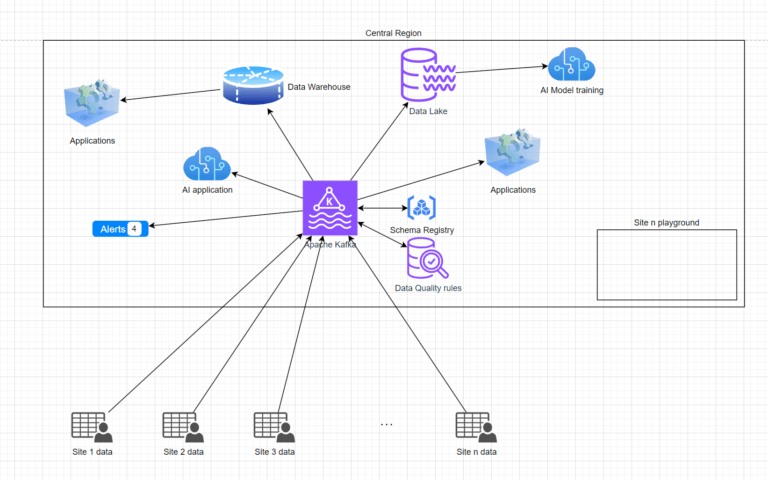

Proposed Architecture (Summary)

In short, all sites produce all data into a central Apache Kafka instance. The payload format is commonly agreed on and contains standardized fields used by all/many sites and a site/region specific extension area. This payload format is stored including documentation in the schema registry.

Incoming data is fed into Data Quality rules to augment the data with the rule results. Consumers can then pick which kind of data, raw or cleansed, to use.

Various systems consume the data as they require: all data, just a specific data domain, data from a few sites only, with low latency, at fixed intervals, just once.

Within the same cloud region are playgrounds to enable the sites building applications themselves, either based on existing services or consuming the produced data directly. Point is, the playground is as open as their own local IT would be – no constraints.

Data Model (Schema registry)

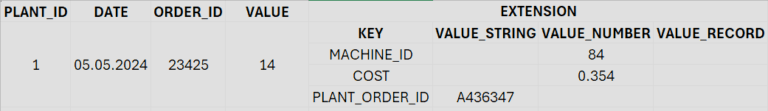

The solution is a data model with a minimum shared tables/columns defined by the HQ and an extensibility concept for sites to augment it with their additional requirements. The Confluent Schema Registry can be used for exactly that.

Let’s visualize that with an example. Factories produce similar or totally different products and the HQ requires an overview across all factories. There will be data that is common to all factories, like material master data, production time, quantity produced, gross profit.

The HQ defines a data model for that, thus communicating what kind of data it desires. This should be the bare minimum, well documented inline and must support schema evolution to enhance the data model over the time. Thanks to the schema evolution the shared data model does not need to be perfect from the start. It can evolve and get better and better with every iteration and it can adjust to new requirements. Kaizen!

The defined data model should have additional structures where sites can add their individual additional data. In the modern world this can be an array attached to each row as a Key-Value store or a custom record.

Table structures in the modern world can not only contain columns with primitive data types but also array and nested structures.

Using this functionality the contradiction got resolved. The HQ defined what fields it expects in a table and all applications using just these must be built just once, e.g. a profit statement report can be viewed by the site for their data only or by HQ to get the full picture.

The site can add custom data because the data model provides a place for these and site specific applications have the in access.

In case the structure evolves and e.g. the COST information is promoted into the central data model, the data is already there and can be copied easily.

Historical attempts to the problem

What is a Schema Registry?

A Schema Registry itself is a web service to store and retrieve schema definition documents, either by name or version. The user can ask “What is the latest version of schema CUSTOMER_MASTER?” and gets back a document describing the columns.

The most popular and advanced document type is Apache Avro. It allows to represent any Json document in a binary format and validate it against a schema. Thus the binary format must only store the schema id, an integer number, and not all field names as in Json. It also supports schema evolution to modify one schema version to the next in a compatible way.

Additional metadata can be added at every level, thus marking the primary key columns, extensive documentation, more precise data type information, etc.

Data Quality rules

The requirement is to increase, ensure and monitor the quality of the data. In technical terms, whenever a new record is provided, it undergoes a series of checks and the result of these checks is stored. As one record can trigger 100 and more checks, the amount of data created can be huge. In the past that meant the results cannot be stored but must be aggregated first. We are living in a Big Data world and Apache Kafka provides all needed. Data produced enters a Kafka topic and a KStream does the quality checks. The result of all checks are added into the same record and consumers can decide to use that information, use the summary or ignore it altogether.

Typically the Data Lake would store all information, thus allow for detailed operational statistics. There we can answer questions like “Which rule was violated how often per week” to get a trend. The DWH contains only the summary, if the record was PASS, WARN or FAIL.

Data Cleansing comes in different flavors and is an important task in the decentral-central data integration. A typical example is that all plants have a production order status field but the value is different – it needs to be standardized. This is one of the most important tasks to create useful reports across all sites!

Data Enrichment means to derive from one information other information, e.g. the sales order number is using different ranges for different sales channels – would be good to add an explicit SALES_CHANNEL column, to provide this knowledge to all users.

Data Validation is a category where incorrect data can be identified but the correct value is now known, e.g. an order about 1234kg is highly unlikely, seems a decimal point is missing.

Data Correction is the term when incorrect data can be identified and corrected with a great confidence. Yes, 525 W Santa Clara St, San Jose, CA is a valid address but the postal code 9511 is definitely wrong, 95113 is the correct one for sure.

Data Matching goes more into merging different records into one. Typical example is a two customer records are about the same person, they were just entered twice. In a decentralized IT does happens constantly when multiple sites have the same vendor and the systems are not connected. This problem in a proactive manner is called Master Data Governance.

To summarize, the data cleansing comes as

- Data Standardisation

- Data Enrichment

- Data Validation

- Data Correction

- Data Matching

Where to cleanse?

Okay, so that is produced, sent to Kafka, Cleansed and distributed to the various target systems or consumed by processes. But that raises a few questions: Why cleanse the data here and not in the source system? What should be done with incorrect data?

Of course it is best if the source systems themselves have a perfect data quality. This comes at a price, however. If a web shop requires customers to enter perfect addresses, e.g. city name must be the official spelling, not San Jose, not SAN JOSE, .. only San José is allowed, this web shop will lose customers during the onboarding.

The conclusion to draw is that data quality depends on the context of its use. For the web shop any address is perfect if the parcel arrives its destination. For a report showing the revenue of customers living in San José, missing the number from all different spellings will lead to wrong results and wrong conclusions – in this context the data quality is bad. Same data, different sentiments about the quality.

Hence the conclusion should be, if the quality can be increased and at the same time the source system can be made more comfortable to use, then yes, by all means, cleanse the data in the source system at data entry time.

In all other circumstances a reactive approach is better. Data can be entered quickly, later a process goes through the rules and does optimize the data in the source data. At least it makes suggestions for a user to accept or dismiss. In case this does not make sense either, the data can be provided with the original and cleansed values to the downstream systems.

This entire topic is especially important in our central/decentral model. As seen with the previous example, central needs 100% standardized data and the decentral locations do not. Central needs globally agreed and unique master data, the sites maintain their own master data. Central has the requirement of higher data quality, hence it implements the process and it cannot simply tell all sites perfect data quality in the global context is to be provided.

Data Quality Process

Treating a project to increase the data quality as a one time job, is the worst possible approach. For that reason the architecture does cleanse all records when they arrive in the Kafka hub.

But all of the cleansing rules create operational metadata on the fly. Metadata that itself is useful and should be monitored and analysed. How often was the validation rule #325 violated? Are the violations growing or decreasing? Is the source of these a single system or equally distributed across all sources? This information is key for the ongoing drive for better data quality. By augmenting the incoming records with this quality metadata, all statistics are possible.

Finally, the output of the operational metadata itself can trigger actions, like sending an email when two records were identified as a potential match-candidate, asking the user to double check and take actions.

Data Governance is a continuous process which gets constantly enhanced. Kaizen again!

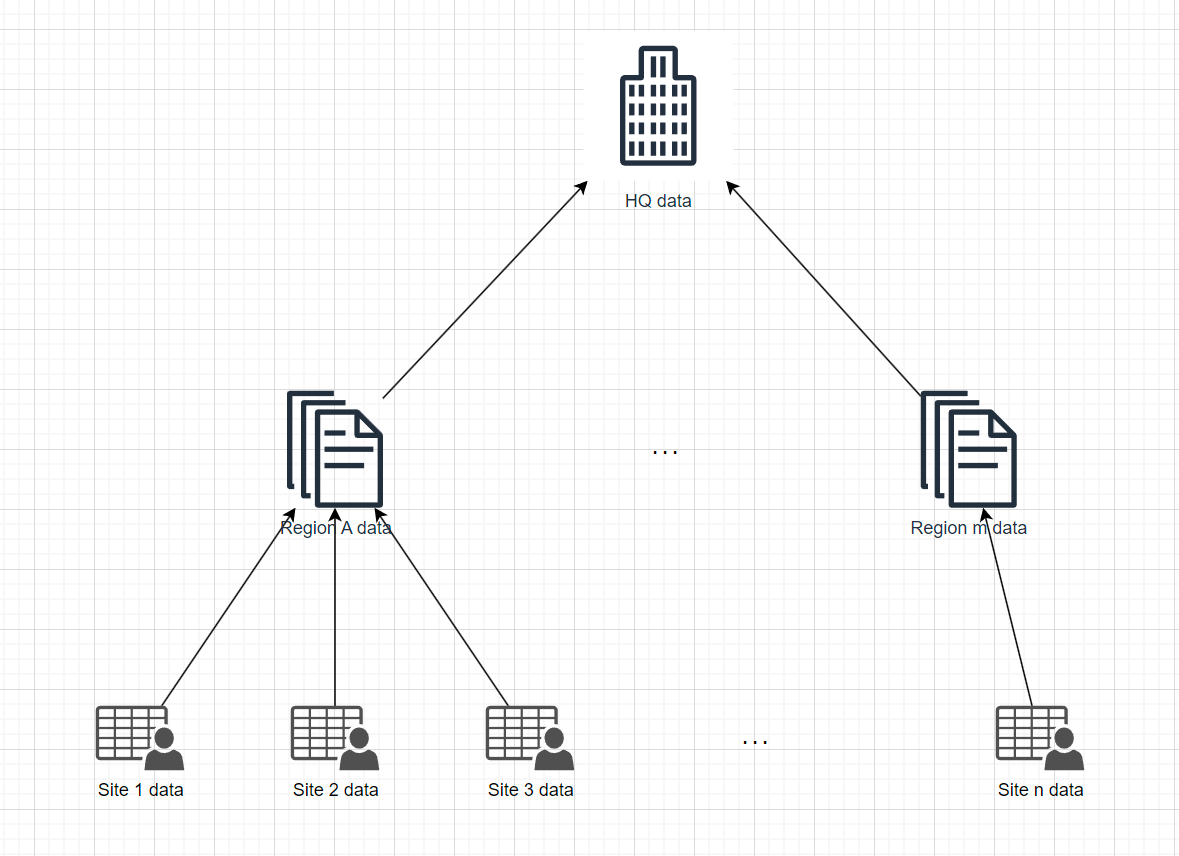

Organization of sites, regions and HQ

Usually the IT systems are grown. A site built something on their own and in their local data center and common data is rolled up to the region and HQ.

The logical consequence is, with each roll up, information is lost. The site has all the details, the regional organization data from multiple sites but with less detail and HW only the summary.

The deployment reflects that as well: Each location has its own database with data it had requested. That can certainly be improved.

The DG Part 1 proposed an extensible data model as solution. This allows to harmonize shared data and yet gives the option to add additional data unique to the site.

Hence the idea should be that each site is just a partition of all data. Regional data is a view spanning a few partitions, HQ is a view of all partitions.

With all data in close proximity, within the same database even, processing one site, multiple sites or all data is very fast. Each site would still have a database schema of its own in this central database to grant the sites a high degree of freedom. The site has access to its data only but can do whatever it likes. For Data Lake storage it is the same partitioned approach with each site owning a directory with their data.

Create new applications, test drive services, run programs, report on all their data.

Further advantages are:

- Whatever had been built by one site can be rolled out to others

- If we allow summary data to be viewed by all sites, this creates the ability to compare business performance with others – a gamification to optimizing the processes

The main downside is the increased latency for users from the other side of the planet. But replicating data 1:1 to other cloud regions is a normal operation and can be done easily, if needed. Combining data from different regions and harmonizing is the difficult bit and the reason for long running integration projects.

Managing the permissions and proper naming conventions are the important building blocks for a successful project. How this is done depends on the services used and the Cloud vendor. Each site has their own accounts with full flexibility and the data is granted via cross account permissions (AWS speak)?