A Data Quality Operating System for today's AI world

Everybody talks about data quality and how it is the foundation of AI, and vendors offer products for it: data quality measurement as part of a data governance solution, a Master Data Management solution, address cleansing features in the ETL tool, and so on.

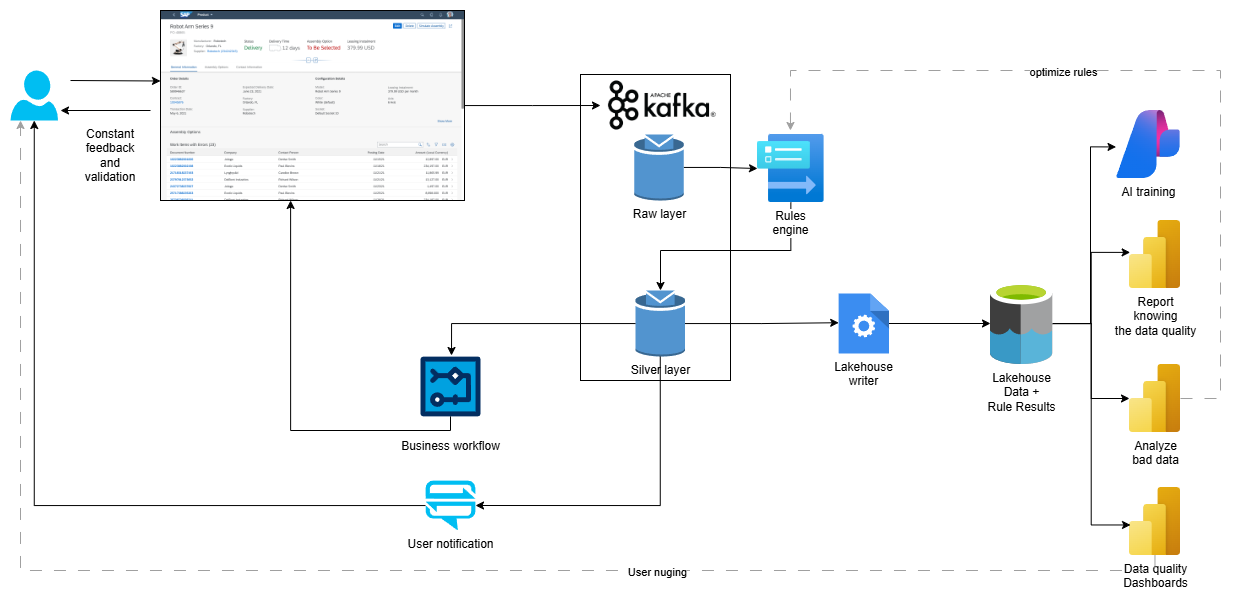

But customers need a complete solution, integrated into their business workflows. I will show how this can be built and that it actually fits very well into a modern data stack.

Learnings from the past

The first time data quality became a big topic was in the context of Data Warehousing. How can you draw the right conclusions when the data is inconsistent?

Depending on the prevailing sentiment, the approach was one of the following:

- Set up a data quality initiative to cleanse the current data in the source. Wrong, because data quality is a continuous process, not a one-time cleanup.

- Protect the Data Warehouse from bad data by rejecting it. Wrong, because missing data is the worst kind of bad data quality.

- Cleanse the data in the ETL process. Okay, it is useful, but how much data can be cleansed automatically without user interaction? Probably not much.

- Add cleansing rules in the source system. Okay, because why cleanse the data in the Data Warehouse if the same can be done in the source?

- If the data is of higher quality in the Data Warehouse, it should be loaded back into the source system, shouldn’t it? Wrong, because then we assume we can cleanse the data automatically and that the result is so good that we know better than the person who entered the data. Too risky. And why the detour? Add the logic to the source system instead.

- Create data quality dashboards in the Data Warehouse. Okay, because reporting data quality metrics and their trend makes it possible to monitor problems and take action early. But why in the DWH, where the data has already been transformed? It would make more sense to test the raw data.

What we need

What about the following thought:

- Whenever possible, the operational system should be changed to speed up data entry and increase data quality at the same time, knowing well that this will not always be possible and will be a larger project of its own.

- A Master Data Management system is a project in itself. If one exists, it is treated as just another source.

- Changed data is validated immediately, but outside the source systems and as an asynchronous process. It should not impact the source systems.

- The result of the validation is documented and provided as a summary report to management and source system owners, to increase the visibility of problems.

- The validation result triggers workflows, even if it is just an email. If we know the entered data is doubtful, can perhaps even suggest a correction, and the user still has the case in mind, the chances are good that the data will be corrected.

- Enhancements to the data, such as standardization, deriving information, and clustering, are needed only in the context of the analytical platform.

- When reporting on the data, the data quality results are shown, giving users a chance to judge the accuracy of the data before drawing the wrong conclusions.
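A minimal sketch of the asynchronous validation and workflow trigger described above, using Python's `asyncio`: the source system only puts a change event on a queue and carries on, while a separate validator consumes it, applies rules and queues a notification for the person who entered the data. The event shape, both rules, the 50-ton threshold and the `outbox` list (a stand-in for an email or workflow system) are hypothetical.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    """A changed record captured from a source system (hypothetical shape)."""
    source: str
    key: str
    data: dict
    entered_by: str


@dataclass
class Finding:
    """A rule's verdict that a record is doubtful, optionally with a suggested fix."""
    rule: str
    message: str
    suggestion: Optional[str] = None


def quantity_plausible(data: dict) -> Optional[Finding]:
    # Hypothetical threshold: orders above 50 tons are considered unusual.
    qty = data.get("qty_tons", 0)
    if qty > 50:
        return Finding("quantity_plausible",
                       f"Order quantity of {qty} tons is unusually high",
                       suggestion=f"Did you mean {qty / 100:.2f} tons?")
    return None


def region_present(data: dict) -> Optional[Finding]:
    if not data.get("region"):
        return Finding("region_present", "Region is missing")
    return None


RULES = [quantity_plausible, region_present]


async def validator(queue: asyncio.Queue, outbox: list) -> None:
    """Consumes change events outside the source system and triggers a workflow."""
    while True:
        event = await queue.get()
        findings = [f for rule in RULES if (f := rule(event.data)) is not None]
        if findings:
            # Stand-in for an email or workflow task to the person who entered the data.
            outbox.append((event.entered_by, event.key, findings))
        queue.task_done()


async def main() -> list:
    queue: asyncio.Queue = asyncio.Queue()
    outbox: list = []
    worker = asyncio.create_task(validator(queue, outbox))
    # The source system only publishes the change; it never waits for the validation.
    queue.put_nowait(ChangeEvent("ERP", "SO-1001", {"qty_tons": 100, "region": "EMEA"}, "agent.a"))
    queue.put_nowait(ChangeEvent("ERP", "SO-1002", {"qty_tons": 2, "region": "APJ"}, "agent.b"))
    await queue.join()
    worker.cancel()
    return outbox


if __name__ == "__main__":
    for user, key, findings in asyncio.run(main()):
        for f in findings:
            print(user, key, f.message, f.suggestion or "")
```

Only the first order produces a notification, including the suggested correction, while the sales agent is still likely to remember the call.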

The process above gives the best balance of benefit, cost, and risk. Why? Let’s follow the business process, the different personas, and their priorities when working with the data.

A customer calls, ordering 1 ton of a material. The sales agent enters only the minimum required information because both are under time pressure. If order entry takes too long, we risk losing the customer. Striving for perfect data quality is the least of their concerns. Ask whether the customer has ordered something in the past, pray the caller knows, check material availability, agree on price and shipment date. Next caller, please.

The sales order process implements the fundamental checks, but its focus is ease of use.

This data then flows to other systems, and occasionally issues are uncovered. These must be fixed after the fact, which costs money. The analytical platform is where all data ends up and where it is connected with all other data. Consistency is required there not only within one business object (e.g. one sales order with everything belonging to it: customer, address, materials, …) but also in aggregate. Even if every sales order is correct, the revenue per customer region might be wrong due to missing region information, different spellings of regions, etc.
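To make the aggregation problem concrete, here is a small Python sketch with made-up orders. Each order is fine on its own, yet the revenue per region is split across spellings and a missing value. The synonym table is a hypothetical example of the standardization that belongs in the analytical platform; it can merge spellings but cannot recover the missing region, which can only be reported.

```python
from collections import defaultdict

# Made-up orders: each one would pass record-level checks on its own.
orders = [
    {"order": "SO-1", "customer": "C1", "region": "Bavaria", "revenue": 1000.0},
    {"order": "SO-2", "customer": "C2", "region": "bavaria ", "revenue": 500.0},
    {"order": "SO-3", "customer": "C3", "region": "Bayern", "revenue": 700.0},
    {"order": "SO-4", "customer": "C4", "region": None, "revenue": 300.0},
]

# Hypothetical synonym table used for standardization on the analytical platform.
SYNONYMS = {"bavaria": "Bavaria", "bayern": "Bavaria"}


def standardize_region(region):
    """Map known spellings to one canonical value; missing stays missing."""
    if not region:
        return None
    return SYNONYMS.get(region.strip().lower(), region.strip())


def revenue_by_region(rows, normalize=None):
    """Sum revenue per region, optionally after standardizing the region."""
    totals = defaultdict(float)
    for row in rows:
        region = normalize(row["region"]) if normalize else row["region"]
        totals[region or "<missing>"] += row["revenue"]
    return dict(totals)


print(revenue_by_region(orders))                      # four buckets for what is really one region
print(revenue_by_region(orders, standardize_region))  # spellings merged, missing region still visible
```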

The analytical platform is the one impacted the most and has the highest data quality requirements.

In an AI-driven world, this is even worse. We no longer look at the data ourselves; AI agents make decisions based on the data, and they have been trained on it.

That’s like a basketball player who occasionally misjudges the distance to the hoop. In those cases, the likelihood of scoring a 3-pointer will be lower, which is understandable. But a single flawed record during training impacts all future throws.

It is easy to say: fix all problems in the source. That would make the most sense, as it avoids, e.g., return shipments due to wrong shipping addresses, catalogs mailed twice, and all the other money wasted due to bad data. It is also unrealistic. Hence, report on problems, quantify them, and try to fix them in the source, but do not rely on that happening.

The analytical and AI-powered systems require a whole different level of data quality, a level the source systems cannot achieve. Therefore something must be built outside the source systems anyway.

If doubtful data has been identified, play that information back via a business workflow, but only in those cases where the business process benefits from it. As said, the business workflow needs consistent data within one business object, while the analytical platform needs consistency across all of them. These are two distinct requirements.
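One way to express these two distinct requirements is to give each rule a scope. A minimal sketch, assuming a hypothetical `scope` attribute per rule: only violations of record-level rules that matter to the business process are routed to a workflow, while every violation is still kept for reporting on the analytical platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleResult:
    rule: str
    scope: str    # hypothetical: "record" matters to the business process, "analytics" is cross-record only
    passed: bool


def route(results):
    """Split violations into those worth a workflow and those only reported."""
    violations = [r for r in results if not r.passed]
    to_workflow = [r.rule for r in violations if r.scope == "record"]
    to_report = [r.rule for r in violations]  # every violation is still stored and reported
    return to_workflow, to_report


results = [
    RuleResult("shipping_address_complete", "record", False),
    RuleResult("region_matches_master_list", "analytics", False),
    RuleResult("customer_exists", "record", True),
]
print(route(results))
```

The sales agent is bothered only about the incomplete shipping address; the region mismatch is a concern for the analytical platform alone.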

Fits nicely with modern data integration patterns

Modern data integration is about capturing changed data once and then distributing it to all consumers.

Usually the intermediary used for distributing data is Apache Kafka, which gives us the required low-latency solution. All that is missing is a rules engine: the rtdi.io rules engine.
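The following is not the rtdi.io rules engine or its API; it is a generic Python sketch of what a rules step on the change stream does: read a record, evaluate every rule, and write the record back out enriched with per-rule and overall results. Plain Python lists stand in for the Kafka input and output topics so the example runs without a broker. Rule names, the threshold and the `_rule_results`/`_overall` field names are hypothetical.

```python
import json
from typing import Callable

# Each rule returns "PASS", "WARN" or "FAIL" for a record (hypothetical convention).
Rule = Callable[[dict], str]


def country_not_empty(rec: dict) -> str:
    return "PASS" if rec.get("country") else "FAIL"


def qty_plausible(rec: dict) -> str:
    return "WARN" if rec.get("qty_tons", 0) > 50 else "PASS"


RULES = {"country_not_empty": country_not_empty, "qty_plausible": qty_plausible}
SEVERITY = {"PASS": 0, "WARN": 1, "FAIL": 2}


def apply_rules(rec: dict) -> dict:
    """Return a copy of the record with per-rule results and the worst result as overall."""
    results = {name: rule(rec) for name, rule in RULES.items()}
    overall = max(results.values(), key=SEVERITY.__getitem__)
    return {**rec, "_rule_results": results, "_overall": overall}


# Stand-in for messages consumed from an input topic.
input_topic = [
    '{"order": "SO-1", "country": "DE", "qty_tons": 1}',
    '{"order": "SO-2", "country": "", "qty_tons": 100}',
]

# Stand-in for the output topic feeding the Lakehouse and any workflow consumers.
output_topic = [json.dumps(apply_rules(json.loads(msg))) for msg in input_topic]
for msg in output_topic:
    print(msg)
```

Keeping the results inside the record itself, rather than in a separate log, is what later lets reports and training jobs filter or group by them.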

Now we can add multiple feedback loops to increase the data quality:

- As said initially, if possible the source system should provide immediate feedback about bad data.

- If the rules engine identifies doubtful data that is relevant to the user, it can either

  - trigger a workflow like “Is this the correct address, yes or no?”

  - send a notification like “The order quantity is unusually high. Is it possible you wanted to enter 1.00 tons instead of 100 tons?”

- All rule violations are stored in the Lakehouse, so they

  - can be shown while building or viewing reports, to avoid drawing the wrong conclusions, e.g. “100% of my customers are male? Well, only 1% of my customers have a gender set!”

  - can be used to analyze the root cause, e.g. “Why was the country field empty for so many records? Oh, we connected a new system, seems we have a problem there!”

  - can feed data quality dashboards that show management the problems and their trend.

- More or better rules can be added to the system easily.

- By being transparent about where the data came from and what data problems have been uncovered, there is a constant incentive to improve.
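The gender example above can be sketched as a report figure that always travels with its coverage. This is an illustrative Python helper with made-up data, not a feature of any particular BI tool.

```python
from collections import Counter


def share_with_coverage(rows, field):
    """Distribution of a field's values, plus the share of rows where it is set at all."""
    filled = [r[field] for r in rows if r.get(field)]
    coverage = len(filled) / len(rows) if rows else 0.0
    counts = Counter(filled)
    distribution = {v: n / len(filled) for v, n in counts.items()} if filled else {}
    return distribution, coverage


# Made-up customer table: 100 customers, only one with a gender set.
customers = [{"id": i, "gender": None} for i in range(99)] + [{"id": 99, "gender": "male"}]
dist, cov = share_with_coverage(customers, "gender")
print(f"{dist} based on {cov:.0%} of customers")
```

Printing the coverage next to the distribution turns “100% male” into “100% male, based on 1% of customers”.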

This approach has multiple advantages for the business:

- The rules executed on a record are known

- The result of each individual rule is known

- The overall rule result and data quality level is known

- Based on the rule violation, data can be played back into the source system

- Based on the rule violation, the user can be notified immediately, while still working on the data

- Rule results are part of each row, so they can be reported and analyzed just like any other data

- Reporting is not limited to the data quality dashboards a vendor provides; the full flexibility of any BI tool is available
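Because the rule results sit in each row, root-cause questions like the empty-country example become an ordinary group-by. A minimal Python sketch with hypothetical rows and field names; in practice this would be a SQL query or a BI pivot over the Lakehouse table.

```python
from collections import Counter

# Hypothetical Lakehouse rows, each carrying its own rule results.
rows = [
    {"source": "ERP", "country": "DE", "rules": {"country_not_empty": "PASS"}},
    {"source": "ERP", "country": "FR", "rules": {"country_not_empty": "PASS"}},
    {"source": "WebShop", "country": "", "rules": {"country_not_empty": "FAIL"}},
    {"source": "WebShop", "country": "", "rules": {"country_not_empty": "FAIL"}},
    {"source": "WebShop", "country": "AT", "rules": {"country_not_empty": "PASS"}},
]


def failures_by(rows, dimension, rule):
    """Count failures of one rule per value of any column: a plain GROUP BY."""
    return Counter(r[dimension] for r in rows if r["rules"].get(rule) == "FAIL")


print(failures_by(rows, "source", "country_not_empty"))  # points at the newly connected system
```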

From an architectural point of view:

- No impact on the source system, and therefore easier to implement and more flexible

- AI is trained on the Lakehouse data and can utilize the rule results, e.g. train on valid data only

- Separation of concerns between the operational source systems and the analytical platform
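Tying this back to the basketball analogy, here is a minimal sketch of filtering training data by the stored overall rule result. The rows, the `_overall` field and the accepted statuses are hypothetical; which statuses count as trainable is a per-model decision.

```python
# Hypothetical Lakehouse rows carrying the overall rule result from the rules engine.
rows = [
    {"distance_m": 7.2, "made": True, "_overall": "PASS"},
    {"distance_m": 72.0, "made": True, "_overall": "FAIL"},  # implausible distance, e.g. a unit error
    {"distance_m": 6.9, "made": False, "_overall": "WARN"},
]


def training_set(rows, accept=("PASS",)):
    """Keep only rows whose overall rule result is acceptable for training."""
    return [r for r in rows if r["_overall"] in accept]


strict = training_set(rows)                             # valid data only
lenient = training_set(rows, accept=("PASS", "WARN"))   # also tolerate warnings
print(len(strict), len(lenient))
```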